I probably shouldn’t reply until you’ve read the paper since that might explain things better.

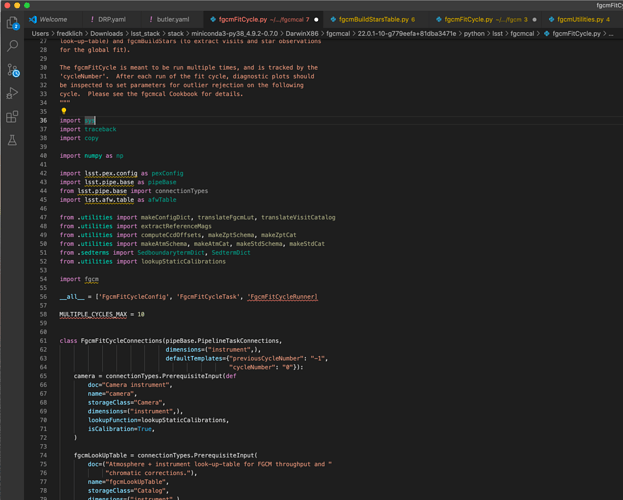

The butler is two things. It’s a file store with code that knows how to convert between python objects and files on disk. It’s a “registry” (aka database) that records the scientific relationships between all these files. The dataIds, collection names, and dataset types are all registry concepts and allow people to find their datasets without having to know where datastore wrote them or what format they are in.

This has nothing to do with the content of FITS files. Some dataset types might correspond to catalogs of sources or objects but butler doesn’t know anything about it. The fact that FITS files have some binary tables in them is not known to butler. It’s known to the Formatter class that has to read and write that file but that’s it. There is a clean distinction between butler managing the flow of data and what is in the data files.

“pipeline repository” is not a term we used. The butler repository stores the outputs from pipeline execution and allows pipelines to read in datasets from it.