FITS header compression has been discussed in the past both by the FPACK authors (Pence, White, & Seaman) and by the FITS technical working group. When applied to pixels, the default FPACK Rice compression outperforms gzip in both compression ratio and speed, for lossless and lossy compression alike. It has always been recognized that use cases involving numerous small cutouts would benefit most from a native FITS header compression convention; otherwise, human-readable headers are a desirable feature. (A review of which FITS keywords are actually required might be the most efficient way to reduce header size.)
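
To see why headers are such good candidates for compression, the sketch below builds a minimal FITS header from fixed-length 80-character cards, pads it to a full 2880-byte block as the standard requires, and gzips it. The `card` and `build_header` helpers are hypothetical illustrations, not a CFITSIO or astropy API. They ignore most card-formatting rules (string quoting, long values, HIERARCH) and only right-justify the value to column 30, as fixed format does for numbers and logicals.

```python
import gzip

CARD_LEN = 80     # every FITS header card is exactly 80 ASCII characters
BLOCK_LEN = 2880  # headers (and data) are padded to multiples of 2880 bytes


def card(keyword: str, value: str, comment: str = "") -> str:
    """Simplified card: keyword in columns 1-8, '= ', value ending in column 30."""
    text = f"{keyword:<8}= {value:>20}"
    if comment:
        text += f" / {comment}"
    return text[:CARD_LEN].ljust(CARD_LEN)


def build_header(cards: list[str]) -> bytes:
    """Append the END card and pad with spaces to a whole number of blocks."""
    raw = "".join(cards) + "END".ljust(CARD_LEN)
    padded_len = -(-len(raw) // BLOCK_LEN) * BLOCK_LEN  # round up to block size
    return raw.ljust(padded_len).encode("ascii")


header = build_header([
    card("SIMPLE", "T", "conforms to FITS standard"),
    card("BITPIX", "-32", "32-bit IEEE floating point"),
    card("NAXIS", "2"),
    card("NAXIS1", "30"),
    card("NAXIS2", "30"),
])
compressed = gzip.compress(header)

print(f"raw header: {len(header)} bytes, gzipped: {len(compressed)} bytes")
```

Even this six-card header occupies a full 2880-byte block, almost all of it spaces. For a hypothetical 30 × 30 float32 cutout, the pixels take 3600 bytes, padded to 5760, so the header alone is a third of the 8640-byte file.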

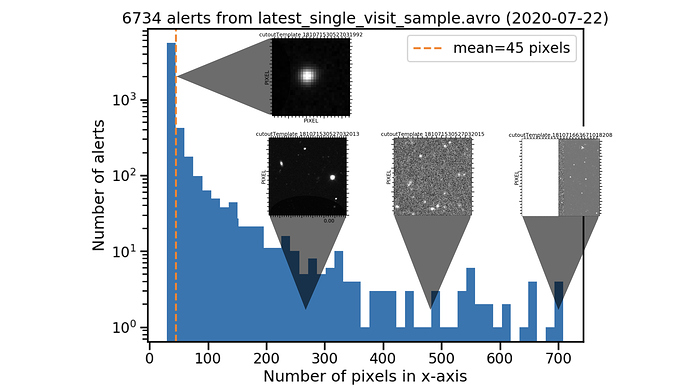

Compression should be viewed in a larger context than storage space alone (see the links from https://heasarc.gsfc.nasa.gov/fitsio/fpack/). Speed is at least as important: both the speed of compression and decompression and, for alerts, the timing budget of multi-hop network transport. Indeed, remaining uncompressed is often an attractive option operationally, for instance if these cutouts will be repeatedly processed by brokers. If some fraction of the cutouts are significantly larger than the rest, those could be FITS tile-compressed while the rest are left uncompressed. That way all cutouts remain conforming FITS files, and libraries like CFITSIO can access the tiles efficiently.
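
A selective policy like this could be as simple as a size threshold. The sketch below estimates the size of each uncompressed image HDU, including the 2880-byte block padding, and selects only the cutouts above a threshold for tile compression (which would then be done with `fpack` or another CFITSIO-based tool). The 30-card header, 4-byte pixels, cutout names, and ten-block threshold are hypothetical values chosen for illustration.

```python
CARD_LEN = 80
BLOCK_LEN = 2880


def padded(nbytes: int) -> int:
    """Round a byte count up to a whole number of 2880-byte FITS blocks."""
    return -(-nbytes // BLOCK_LEN) * BLOCK_LEN


def hdu_size(nx: int, ny: int, bytes_per_pixel: int = 4, n_cards: int = 30) -> int:
    """Uncompressed image HDU size: padded header (cards + END) plus padded data."""
    header_bytes = (n_cards + 1) * CARD_LEN
    data_bytes = nx * ny * bytes_per_pixel
    return padded(header_bytes) + padded(data_bytes)


def plan_compression(cutouts: dict[str, tuple[int, int]], threshold_bytes: int) -> list[str]:
    """Return the names of cutouts large enough to be worth tile-compressing."""
    return [name for name, (nx, ny) in cutouts.items()
            if hdu_size(nx, ny) > threshold_bytes]


cutouts = {"small_a": (30, 30), "small_b": (31, 31), "large": (200, 200)}
to_compress = plan_compression(cutouts, threshold_bytes=10 * BLOCK_LEN)
print(to_compress)
```

Because of block padding, the 30 × 30 and 31 × 31 cutouts occupy the same 8640 bytes, so modest size differences among small cutouts hardly matter; only outliers such as the 200 × 200 cutout clear the threshold, and everything else stays as plain, uncompressed FITS.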

If header compression remains of interest, there are a couple of basic options (with variations under the hood): 1) a legal FITS file can already be constructed by transforming the header keywords into a bintable and FPACK-compressing the table, and 2) it would be relatively trivial to gzip the fz-annotated header records concatenated with the Rice-compressed data records. Note the economies of scale from aggregating MEF headers into a single bintable.
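
The economies of scale come from redundancy shared between headers: in an MEF, most cards repeat from one extension to the next. The sketch below does not implement the bintable/FPACK route of option 1. Instead it uses zlib (the DEFLATE algorithm behind gzip, so closer in spirit to option 2) as a general-purpose stand-in, and compares compressing ten hypothetical extension headers one at a time against compressing them as a single stream. The header contents and the `card`/`make_header` helpers are invented for illustration.

```python
import zlib

CARD_LEN = 80
BLOCK_LEN = 2880


def card(key: str, value: str) -> str:
    """Simplified card: strings start in column 11, other values end in column 30."""
    field = value.ljust(20) if value.startswith("'") else value.rjust(20)
    return f"{key:<8}= {field}".ljust(CARD_LEN)


def make_header(extver: int) -> bytes:
    """A hypothetical image-extension header; only EXTVER and CRVAL1/2 vary."""
    cards = [
        card("XTENSION", "'IMAGE   '"),
        card("BITPIX", "-32"),
        card("NAXIS", "2"),
        card("NAXIS1", "30"),
        card("NAXIS2", "30"),
        card("PCOUNT", "0"),
        card("GCOUNT", "1"),
        card("EXTVER", str(extver)),
        card("CRVAL1", f"{150.0 + extver * 1e-3:.6f}"),
        card("CRVAL2", f"{2.2 + extver * 1e-3:.6f}"),
    ]
    raw = "".join(cards) + "END".ljust(CARD_LEN)
    return raw.ljust(-(-len(raw) // BLOCK_LEN) * BLOCK_LEN).encode("ascii")


headers = [make_header(i) for i in range(1, 11)]

# Compress each header on its own, as if every cutout were shipped separately.
separate = sum(len(zlib.compress(h, 9)) for h in headers)
# Compress all headers as one stream, so repeated cards are encoded only once.
together = len(zlib.compress(b"".join(headers), 9))

print(f"separately: {separate} bytes, aggregated: {together} bytes")
```

Each header after the first is encoded mostly as back-references to the previous one, which is the same redundancy a single aggregated bintable would exploit column by column.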

LSST could also raise the issue of a header compression convention at the FITS BoF at the upcoming ADASS (or rather, raise it now and resolve it then). If higher compression ratios are required, one of these options combined with aggressive lossy FPACK compression could likely achieve ratios of 10:1 or better. The fundamental tradeoff lies in handling both low-entropy headers and high-entropy pixels with the same toolkit.
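
The tradeoff can be made concrete with a little arithmetic. If a file holds H bytes of header compressed at ratio r_h and D bytes of pixels compressed at ratio r_d, the overall ratio is (H + D) / (H / r_h + D / r_d), so it is limited by whichever part compresses worst. The sketch below evaluates this for a hypothetical small cutout (one 2880-byte header block plus 5760 bytes of padded pixel data). The per-part ratios are illustrative inputs, not measured FPACK results, and the block padding that a conforming FITS file would still carry after compression is ignored.

```python
def overall_ratio(header_bytes: float, data_bytes: float,
                  header_ratio: float, data_ratio: float) -> float:
    """Combined ratio when header and pixels are compressed at different ratios.

    R = (H + D) / (H / r_h + D / r_d); padding of the compressed output is ignored.
    """
    compressed = header_bytes / header_ratio + data_bytes / data_ratio
    return (header_bytes + data_bytes) / compressed


H, D = 2880, 5760  # one header block plus a padded 30 x 30 float32 cutout

for r_h, r_d in [(1, 20), (1, 1000), (10, 10), (10, 20)]:
    print(f"header {r_h}:1, pixels {r_d}:1 -> overall {overall_ratio(H, D, r_h, r_d):.2f}:1")
```

With uncompressed headers, the overall ratio for this cutout can never exceed (H + D) / H = 3:1, however aggressive the pixel compression. Compressing the header 10:1 as well makes an overall 10:1 reachable with pixels at 10:1, and 15:1 with pixels at 20:1.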