There isn’t an “export all” that takes all the dimensions, datasets, dataset types, collections and other items and dumps them somewhere. With sqlite it’s easier to copy the datastore and sqlite file to a new location, so it’s been a relatively low priority. Now that we have to write a command to migrate from the old integer dataset IDs to the new UUID form using sqlite, we will need something that looks very much like “export all”.

The low-level ButlerURI.transfer_from() API is currently not async-aware. Slow transfer speeds have been coming up recently so it’s definitely something I want to look at.

An alternative approach for raw ingest is to do something like:

- Run `astrometadata write-index -c metadata` on the raw data files.

- Transfer the raw files (and the index files) to S3 using standard s3 tooling and put them in a special bucket location.

- Now run `butler ingest-raws --transfer=direct REPO RAW-BUCKET`. This will ingest the files using their full URIs without transferring them explicitly into the butler datastore, and it will read the index files to extract the metadata so it won’t have to read all the files.

Doing it this way means you can set up multiple butler repos in S3 (and also delete them) without having to continually upload the files from local disk.
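As a rough sketch of the three steps above, here is a small Python helper that only builds the command lines and does not run anything. The `astrometadata` and `butler` invocations are the ones quoted above; passing the raw directory as the argument to `write-index`, using `aws s3 sync` as the "standard s3 tooling", and the function name and example paths are all my assumptions for illustration.

```python
import shlex


def raw_ingest_commands(raw_dir, bucket_uri, repo):
    """Return the three command lines, in order, without running them."""
    return [
        # Step 1: write index files alongside the raws. The command is from
        # the post; passing the directory as the argument is an assumption.
        ["astrometadata", "write-index", "-c", "metadata", raw_dir],
        # Step 2: upload raws and index files. "aws s3 sync" is an assumed
        # choice of S3 tooling; any equivalent tool would do.
        ["aws", "s3", "sync", raw_dir, bucket_uri],
        # Step 3: ingest in place using the full URIs (command from the post).
        ["butler", "ingest-raws", "--transfer=direct", repo, bucket_uri],
    ]


if __name__ == "__main__":
    # Hypothetical locations, for illustration only.
    for argv in raw_ingest_commands(
        "/data/raw", "s3://raw-bucket/raw", "s3://repo-bucket/repo"
    ):
        print(shlex.join(argv))
```

Because the upload happens once, only the last command needs to be repeated for each new repo you set up against the same raw bucket.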

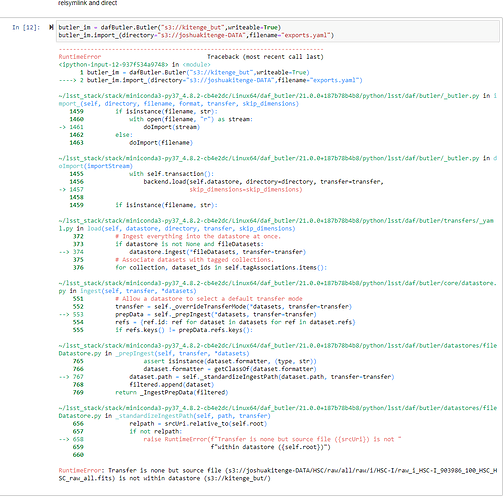

For an import/export you should be able to export from a repo to an S3 bucket and import from an S3 bucket. I think boto3 still downloads each file to the client as part of a copy so it’s not great performance (but maybe since I last looked at this boto3 has got cleverer).

Now for some comments on the web page:

- Maybe note that for butler subcommands, once an instrument is registered you can refer to that instrument by its short name (e.g. for `write-curated-calibrations`; currently `butler convert` will run that command automatically).

- Might be worth showing `butler --help` in your example so that people can see that many of the abilities demonstrated later in the notebook can also be done on the command line.

- In the “Importing data across” section you refer to the `ingest-raws` command and not `import`.

- For code examples you will sometimes see `from lsst.daf.butler import Butler` (especially since that is what the middleware programmers use for all our code and examples). Some butler examples from scientists seem to prefer using `dafButler.Butler`. I imagine some of that is history, to distinguish it from `dafPersistence.Butler` in Gen2, but for a pure Gen3 example I don’t think it’s necessary.

- You may want to explain that a `DatasetRef` is a combination of dataset type and dataId and can refer to an explicit dataset in a specific run (if `ref.id` is defined).

- Not sure if you want to explain the `visit_system` field that comes back. For HSC it doesn’t matter but for LSSTCam it can make a difference.

- I think the `collections` argument for `queryDatasets` might be defaulted to the one that you used to create the Butler so you might not need it in your examples.

- If you have an explicit `ref` returned by `queryDatasets` you can use `butler.getDirect()` to get the dataset. This bypasses the registry and gets the thing directly using the `ref.id`. `butler.get()` always queries the registry and checks that the supplied dataset ref is consistent with what is in the registry for that collection. This is because `butler.get()` has to be able to work with a separate dataset type and dataId and wants consistency. The other thing `butler.get()` does is allow an explicit dataId to contain things like a detector name or exposure OBSID rather than a detector number and exposure integer.

- In cell 13, if you want to ensure that the source catalog you are using matches the calexp, you want to use something like `butler.get("src", dataId=calexp_ref.dataId)`. The current example looks like you are getting index 16 from the result and hoping that they match (which we can see they don’t because the visit in the dataId is different).

- In the more plotting section you then use the `ref.dataId` to get the associated calexp so I’m confused at the ordering. Why not use the calexp dataId to get the source and then reuse the calexp you already had?
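To make the cell 13 comment concrete, here is a minimal sketch of the pattern I have in mind. It only relies on the `butler.get(name, dataId=...)` call quoted above; the helper name is made up for illustration, and it assumes `calexp_ref` is a ref for a calexp that came back from your query and that the repo has a `src` dataset for the same data ID.

```python
def get_matched_calexp_and_src(butler, calexp_ref):
    """Fetch a calexp and the source catalog that share one data ID.

    ``butler`` is anything with a ``get(dataset_type, dataId=...)`` method
    (e.g. a Gen3 Butler); ``calexp_ref`` is anything with a ``dataId``
    attribute (e.g. a DatasetRef returned by a query).
    """
    data_id = calexp_ref.dataId
    # Both lookups use the same data ID, so the catalog is guaranteed to
    # belong to this image rather than to whatever sits at some list index.
    calexp = butler.get("calexp", dataId=data_id)
    src = butler.get("src", dataId=data_id)
    return calexp, src
```

With this ordering you load the calexp once and reuse it for the later plots instead of looking it up again from the source ref.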

There are a couple of recent commands that might interest you (they have Butler APIs to match):

- `butler retrieve-artifacts` can download data files from a repository to a location of your choice (including an S3 bucket). This lets you quickly grab a few files (say because you want to send them to a collaborator).

- `butler transfer-datasets` transfers datasets from one butler repo to another. The current limitation is that it does not create missing dimension records or dataset types during the transfer, but those are things we can think about adding later. It’s been developed to allow registry-free pipeline processing to occur.